Meta (formerly known as Facebook) has recently launched the Llama 3 large language model. This advanced model comes in two versions: an eight-billion (8B) parameter version and a seventy-billion (70B) parameter version.

Llama 3 currently powers Meta AI, Meta’s intelligent assistant. According to the official page, the model was trained on Meta’s latest custom-built 24K-GPU clusters using over 15T tokens of data.

The 8B version of Llama 3 has a knowledge cutoff of March 2023, whereas the 70B version’s cutoff is December 2023, so the two models may give different answers about recent real-world events.

Today, I’ll show you how to run the 8B version of Llama 3 locally on Linux. It is the more practical choice for standard desktops and laptops, which may struggle with the much larger 70B version.

Tutorial Details

| Description | Meta Llama 3 |
| --- | --- |
| Difficulty Level | Low |
| Root or Sudo Privileges | No |
| OS Compatibility | Ubuntu, Manjaro, Fedora, etc. |
| Prerequisites | – |
| Internet Required | Yes (for downloading the model) |

How to Run Meta’s Llama 3 on Linux

To run Meta’s Llama 3 on Linux, we’ll use LM Studio, a GUI application for searching, downloading, and running local LLMs. The 8B version we’ll use is approximately 8.54 GB in size, while the 70B version is approximately 42.52 GB (the exact size depends on which quantized file you pick).
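Because these downloads are large, it may be worth confirming that the filesystem holding your home directory has enough free space first. The snippet below is a minimal sketch, assuming POSIX `df` and `awk` are available; the function name `has_space_for_model` is hypothetical, and the sizes passed to it are the approximate figures quoted above.

```shell
#!/usr/bin/env bash
# has_space_for_model DIR SIZE_GB
# Hypothetical helper: succeeds (exit 0) if the filesystem holding DIR
# has at least SIZE_GB gigabytes (10^9 bytes) of free space.
has_space_for_model() {
  local dir="$1" size_gb="$2" avail_kb
  # With -P (POSIX format) and -k, column 4 of the second line is the
  # available space in 1024-byte blocks.
  avail_kb=$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')
  # Compare inside awk because Bash arithmetic is integer-only.
  awk -v avail="$avail_kb" -v need="$size_gb" \
    'BEGIN { exit !(avail * 1024 >= need * 1000000000) }'
}

# Example: check for room for the ~8.54 GB 8B model under $HOME.
if has_space_for_model "$HOME" 8.54; then
  echo "Enough free space for Llama 3 8B"
else
  echo "Not enough free space for Llama 3 8B"
fi
```

For the 70B version, you would pass `42.52` instead.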

I’m going to set up Meta Llama 3 on my Ubuntu system, but if you’re using a different Linux distribution such as Debian, Fedora, Red Hat, openSUSE, or Arch, you can follow this tutorial just as well. So, let’s begin.

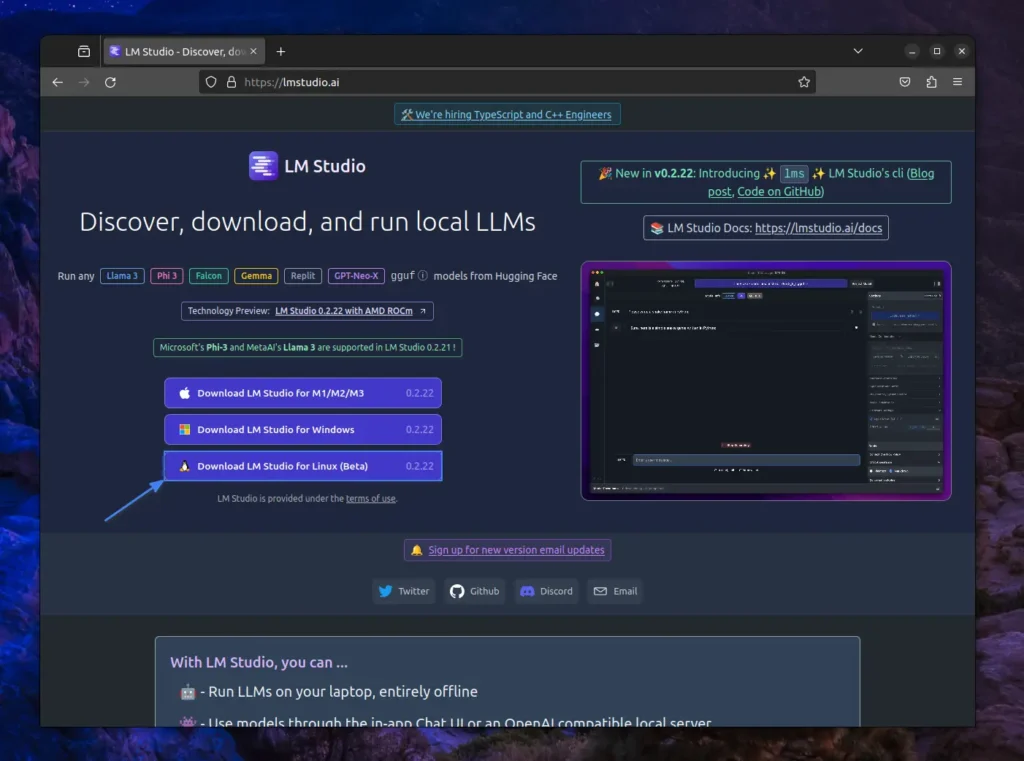

1. Visit lmstudio.ai and download the appropriate LM Studio version for your system. On Linux, it’s currently available as a beta AppImage, but rest assured, I’ve tested it on multiple Linux systems, and it runs about as stably as one could hope for.

2. Once the AppImage has been downloaded to your Downloads directory, open your terminal and change to the “~/Downloads/” directory using the following command:

$ cd ~/Downloads/

Output:

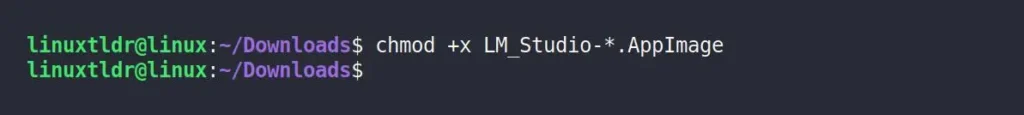

3. Before you can run the LM Studio AppImage, you need to make it executable with the chmod command, because downloaded files don’t have execute permission by default.

$ chmod +x LM_Studio-*.AppImageOutput:

4. Once done, you can launch the LM studio using its relative path.

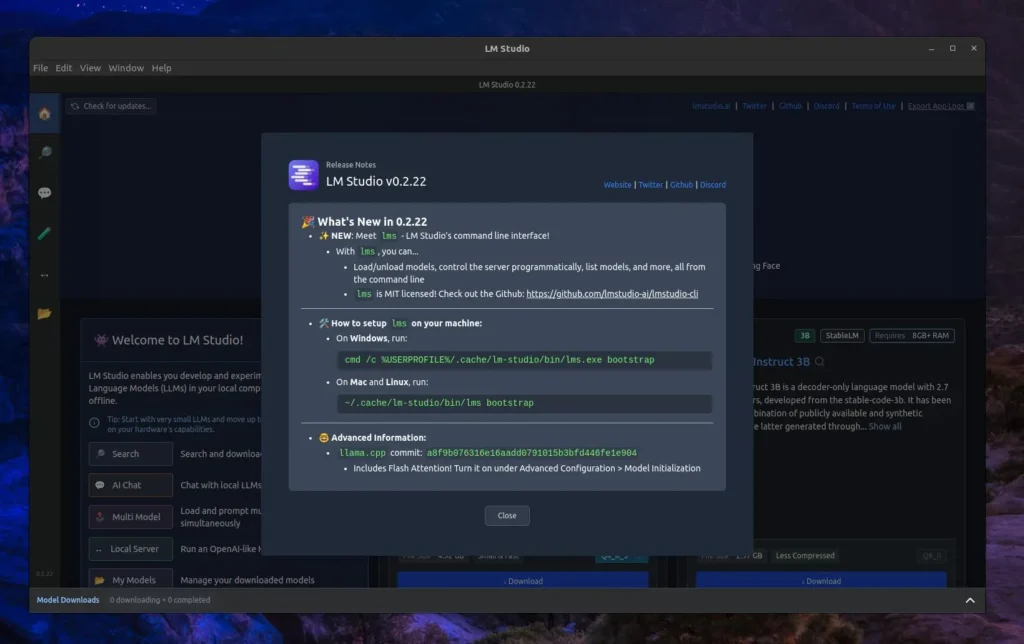

$ ./LM_Studio-*.AppImage

Upon launching it, the following LM Studio window will appear, along with a “Release Notes” prompt that you can hide by clicking the “Close” button.

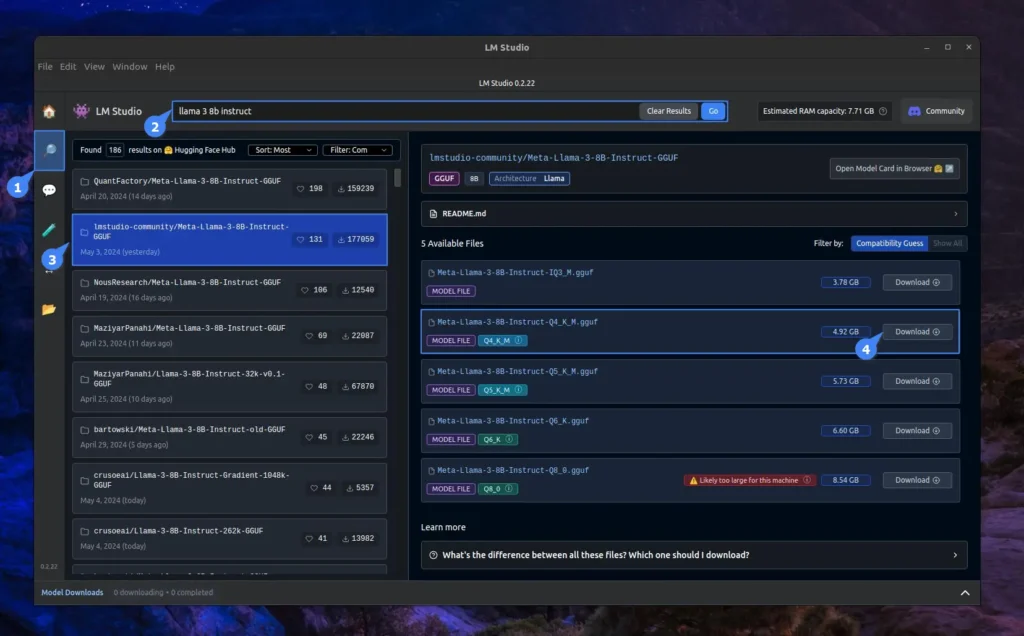
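The terminal steps above (changing to the download folder, running chmod, and launching the AppImage) can be wrapped in a small helper. This is a sketch under stated assumptions: the function name `prepare_lm_studio` is hypothetical, it assumes the `LM_Studio-*.AppImage` naming used above, and it relies on GNU `sort -V` for version ordering.

```shell
#!/usr/bin/env bash
# prepare_lm_studio [DIR]
# Hypothetical helper: finds the newest LM_Studio-*.AppImage in DIR
# (default: ~/Downloads), marks it executable, and prints its path.
prepare_lm_studio() {
  local dir="${1:-$HOME/Downloads}" appimage
  # List all matches, sort them by version number (GNU sort -V),
  # and keep only the last, i.e. the newest, one.
  appimage=$(printf '%s\n' "$dir"/LM_Studio-*.AppImage | sort -V | tail -n 1)
  # If nothing matched, the pattern comes back unexpanded, so verify
  # that we actually found a regular file.
  if [ ! -f "$appimage" ]; then
    echo "No LM_Studio-*.AppImage found in $dir" >&2
    return 1
  fi
  chmod +x "$appimage"   # same effect as step 3, for one file only
  printf '%s\n' "$appimage"
}
```

You could then launch LM Studio with `app=$(prepare_lm_studio ~/Downloads) && "$app"`. Picking a single file is deliberate: if more than one LM Studio version sits in the folder, `./LM_Studio-*.AppImage` expands to several names, and the shell runs the first one with the others passed to it as arguments.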

5. To use Meta’s Llama 3 8B model, you first need to download it. Navigate to “Search”, search for “llama 3 8b instruct”, select “lmstudio-community/Meta-Llama-3-8B-Instruct-GGUF” on the left side, and then download your preferred file (for example, “Meta-Llama-3-8B-Instruct-Q4_K_M.gguf”) from the right side.

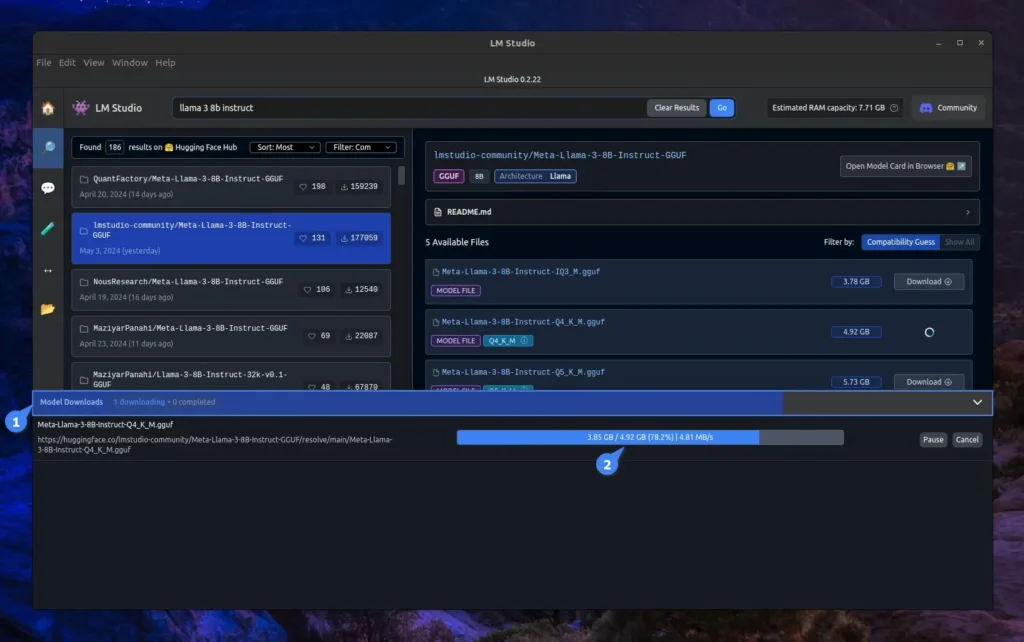

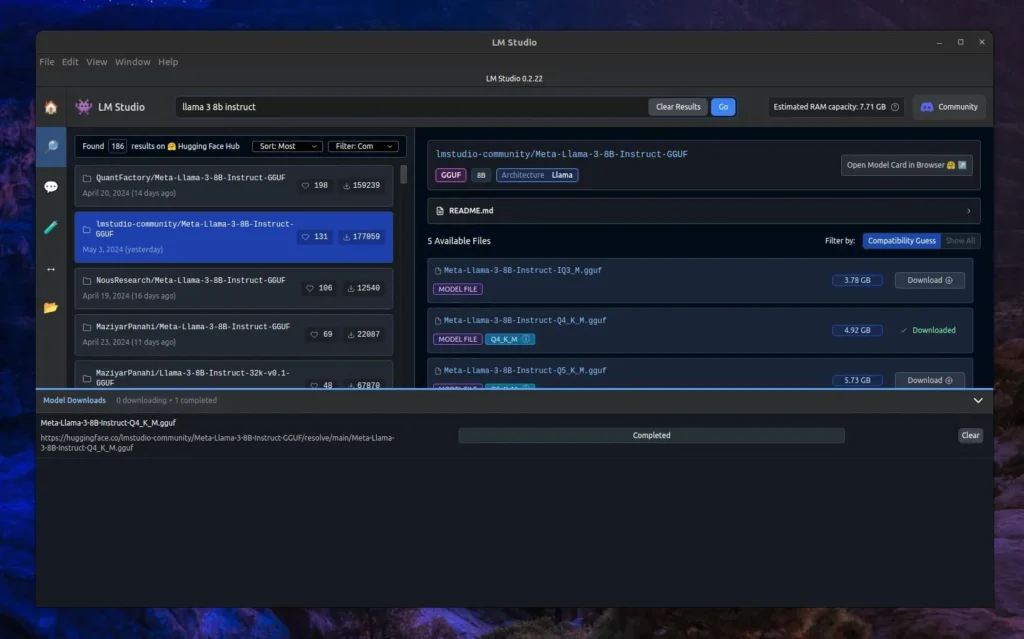

6. Once the download begins, it will take some time to complete, depending on your internet speed. To check the progress, you can click on the “Model Downloads” panel in the footer.

7. Give LM Studio some time to finish downloading the model and verify its integrity.

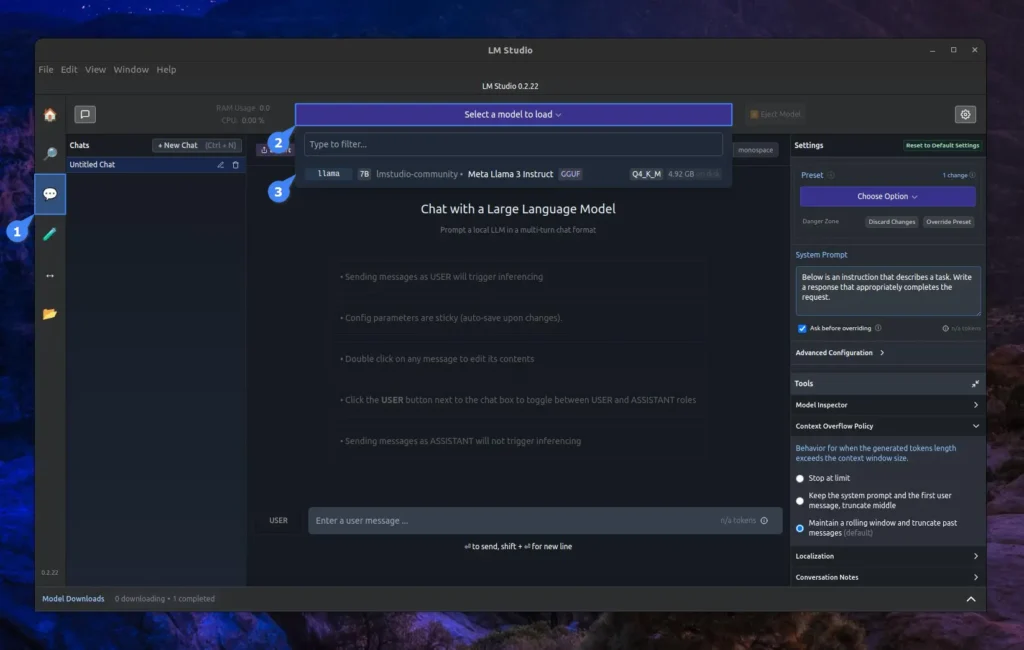
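LM Studio performs its own integrity check. If you ever want a quick manual sanity check on a downloaded `.gguf` file, one cheap test is that GGUF files begin with the four ASCII bytes `GGUF`. The sketch below checks only that header, not the whole file; the function name `is_gguf` is hypothetical, and since the on-disk model location can vary between LM Studio versions, it assumes you pass the file’s full path yourself.

```shell
#!/usr/bin/env bash
# is_gguf FILE
# Hypothetical helper: succeeds if FILE starts with the GGUF magic bytes.
# This is only a header sanity check, NOT a full integrity check.
is_gguf() {
  local file="$1"
  # Missing or non-regular files fail immediately.
  [ -f "$file" ] || return 1
  # Read exactly the first four bytes and compare them with "GGUF".
  [ "$(LC_ALL=C head -c 4 "$file")" = "GGUF" ]
}
```

For example: `is_gguf /path/to/Meta-Llama-3-8B-Instruct-Q4_K_M.gguf && echo "GGUF header OK"`, where the path is a placeholder for wherever the file ended up on your system.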

8. Once Llama 3 is installed, click on the “AI Chat” icon on the left-hand vertical bar in LM Studio. This opens a chat interface similar to ChatGPT. Click “Select a model to load” at the top of the page, then choose the Llama 3 model you just downloaded. LM Studio will load the model, which may take a few seconds.

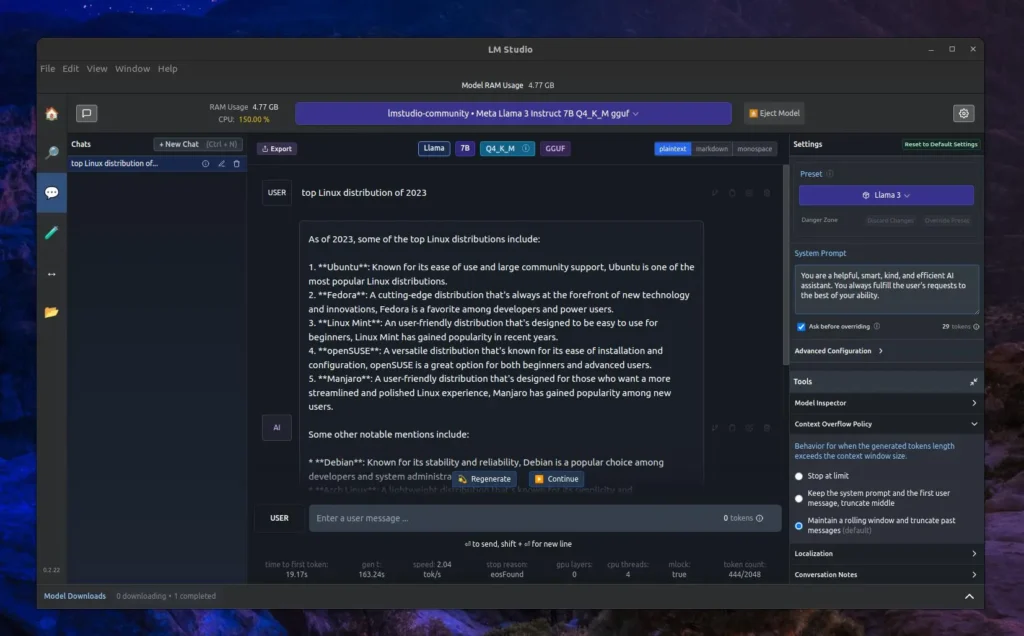

9. Once the model has loaded, you can chat with Llama 3 on your Linux machine. Here, I’ve asked a simple question: “What’s the top Linux distribution for 2023?”.

The response time was a bit slow compared to other large language models, but that’s most likely because my system has a modest RTX 3050 GPU and 16 GB of RAM. On more capable hardware, responses should be faster.
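Since response speed depends heavily on your hardware, it may help to check what your machine has before comparing results. This is a minimal sketch, assuming Linux’s `/proc/meminfo` and, optionally, NVIDIA’s `nvidia-smi` tool; the helper name `total_ram_gb` is hypothetical.

```shell
#!/usr/bin/env bash
# total_ram_gb
# Hypothetical helper: prints total system RAM in GB (10^9 bytes),
# read from /proc/meminfo (Linux only).
total_ram_gb() {
  # MemTotal is reported in "kB", which the kernel means as 1024 bytes.
  awk '/^MemTotal:/ { printf "%.1f\n", $2 * 1024 / 1000000000 }' /proc/meminfo
}

echo "Total RAM: $(total_ram_gb) GB"

# On systems with an NVIDIA driver installed, nvidia-smi can report
# the GPU model and its total video memory.
if command -v nvidia-smi >/dev/null 2>&1; then
  nvidia-smi --query-gpu=name,memory.total --format=csv,noheader
fi
```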

Currently, it lacks image generation capabilities. And while its answers were more accurate than those of its predecessor, Llama 2, in my testing they fell short in clarity and depth, both of which matter a great deal for a large language model.

As of now, I don’t have a system powerful enough to test the Llama 3 70B model, but if you do, give it a try and share your experience in the comments. You can also post any questions there.

That’s it for this article. Before you go, I suggest checking out this amazing article on Uh-Halp: An AI-Powered Command-Line Helper for Linux.

Till then, peace!